2024-10-18

One of the things that I’ve noticed about AI tools is that they’ve changed the way that I think about the role of intelligence in success.

In 2018, if you had sat me down and pressed me on the top qualities required to be successful, I probably would’ve had intelligence first. The ability to figure out the answer, it seemed to me, was probably the most important single quality to have if you could only have one.

In a post-GPT-4 world, though, I’m no longer sure this is true. When I think about my kids and the qualities they need to develop, yes, I want a minimum level of intelligence, but I’m more interested in curiosity, initiative, earnestness, industriousness, judgement / taste, courage, and playfulness.

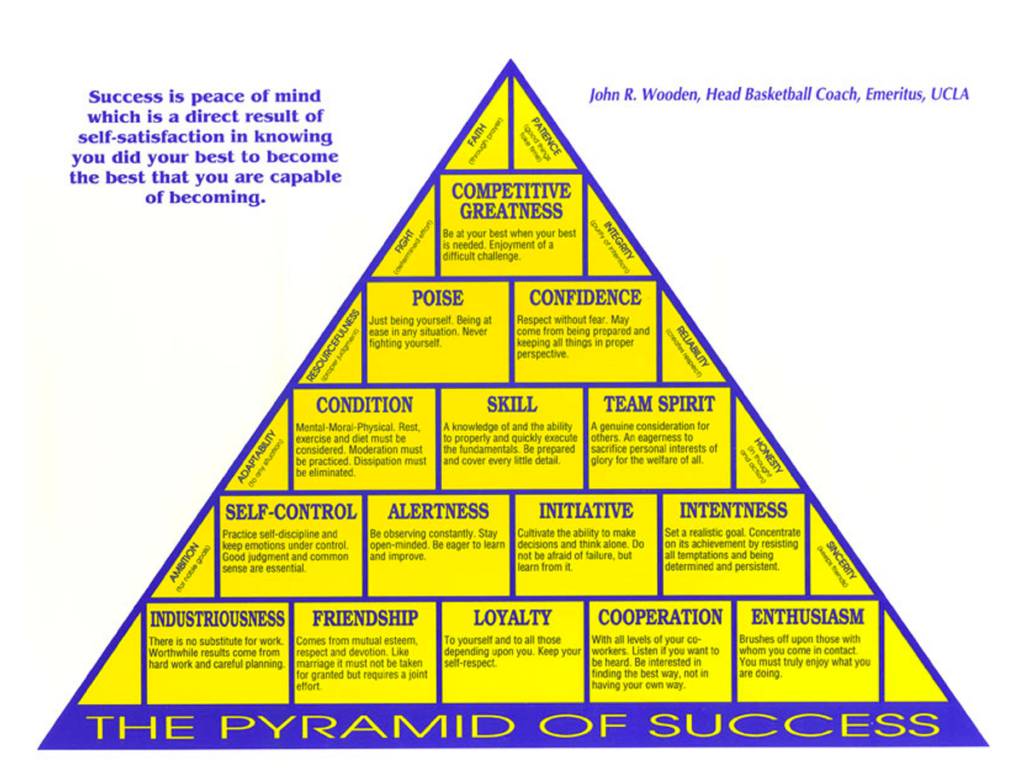

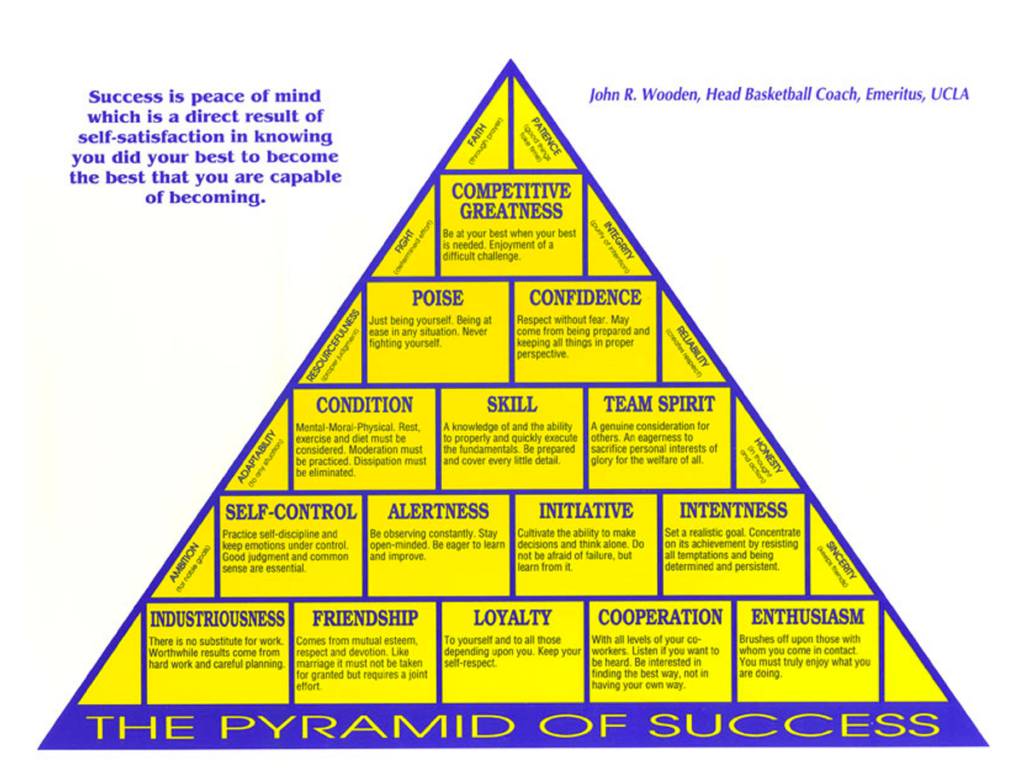

I’m struck by how many of these qualities are sitting in John Wooden’s pyramid of success.

Perhaps I was overrating the role of intelligence all along. With this frame, when I think about the most successful people that I’ve observed up close, I see a whole lot of earnestness and industriousness alongside intelligence and the ability to process information.

Looking ahead, it seems to me that what is increasingly scarce and valuable isn’t the ability to break down what needs to be done, but the ability to get up off the couch and go do it. To consider the result that you get, and then go try again. To create trust with others so they help or at least don’t hinder your progress. When I think about preparing for the world of the future, I think about things like a design studio, a Montessori classroom, or a basketball team.

2024-10-11

Things I learned: “the Milky Way builds between two and six sun-size stars a year.” Quanta Magazine.

My friend Alex Komoroske on Lenny’s Podcast. Alex is one of the 5 most influential people on my career in the past 5 years.

Henrik Karlsson on how Jesuits and Montessori schools teach and scale culture.

The Swiss border is changing due to climate change.

“But the truth is that kids are more like artificial neural networks — they’re at a subtly different point in mind-space, they’re good and bad at different things than adults are good and bad at.” The Psmiths.

The story of how Dr. Zhivago got published. One of the most haunting books I’ve ever read. A reminder that civilization can collapse before your eyes.

How Jason Crawford chooses what to work on.

Speed Matters. “Being 10x faster also changes the kinds of projects that are worth doing.”

2024-10-04

How to make millions as a professional whistleblower. What a weird and interesting career path.

It’s time to talk about America’s disorder problem. One of the things that stood out most to me when moving back to the US from Switzerland was the amount of disorder that we tolerated as a society. This tolerance for disorder might not be entirely bad — America is nothing without its weirdos — but I’m not sure we realize the degree to which it is a choice.

Inventing on principle. Fantastic and thought-provoking talk about what motivates innovation. It has me wondering what principles I can commit to in this way.

How to succeed at Mr. Beast Productions. Includes a great 101 description of how YouTube’s algorithm works + a lot of tenacity.

How I failed. The CEO of O’Reilly Media talks candidly about the biggest lessons he’s learned along the way. Rare to get this much candor in one of these.

Meta smart glasses lead to real-time doxxing. I don’t see any way we can expect to go unrecognized in the future. Better to accept it.

2024-09-09

How the psychiatric narrative hinders those who hear voices | Aeon Essays - an exploration of the “Targeted Individual” community, people who hear voices in their heads. Weird, wild, and a bit scary.

How to beat AI at Go - humans are able to beat the best AIs at Go by finding failure cases they aren’t prepared for. This is the future of warfare.

Palmer Luckey profile: such a great reminder that anything is possible with hard work and determination. Similarly, Casey Handmer on how entrepreneurship has changed the way he thinks.